OpenAI Warns of Rising Cyber Threat: Cybersecurity Risk From Advanced Frontier AI Models Expected to Reach “High” Levels

OpenAI warns that cybersecurity risk from advanced frontier AI models may reach “high” levels as new systems gain autonomous abilities and improve at finding vulnerabilities.

OpenAI issued a striking warning on Wednesday: its next generation of frontier AI systems will likely reach “high” cybersecurity risk levels. The company shared the assessment exclusively with Axios, and it reflects how quickly these models are gaining autonomy and advanced offensive capabilities.

This escalation matters because advanced AI could let far more people, including those with little technical skill, carry out sophisticated cyberattacks with alarming speed and accuracy.

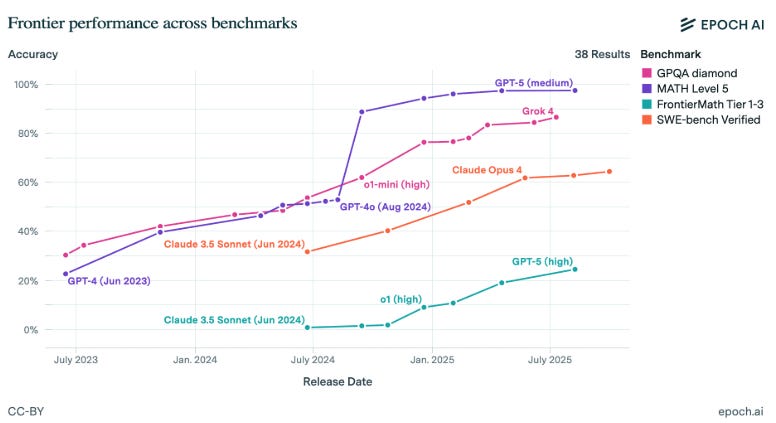

Rapid Growth in Cyber Capabilities

OpenAI reports a sharp jump in performance across recent models. In August, GPT-5 scored 27% on a capture-the-flag (CTF) cybersecurity evaluation. Only a few months later, GPT-5.1-Codex-Max scored 76%, nearly triple the earlier result and a sign of major gains in reasoning, code execution, and problem-solving under simulated attack conditions.

OpenAI noted:

“We expect upcoming AI models to continue on this trajectory. In preparation, we are planning as though each new model could reach ‘high’ cybersecurity capability under our Preparedness Framework.”

Under this framework, the “high” category sits just below “critical”, the level at which OpenAI considers a model unsafe for public release.

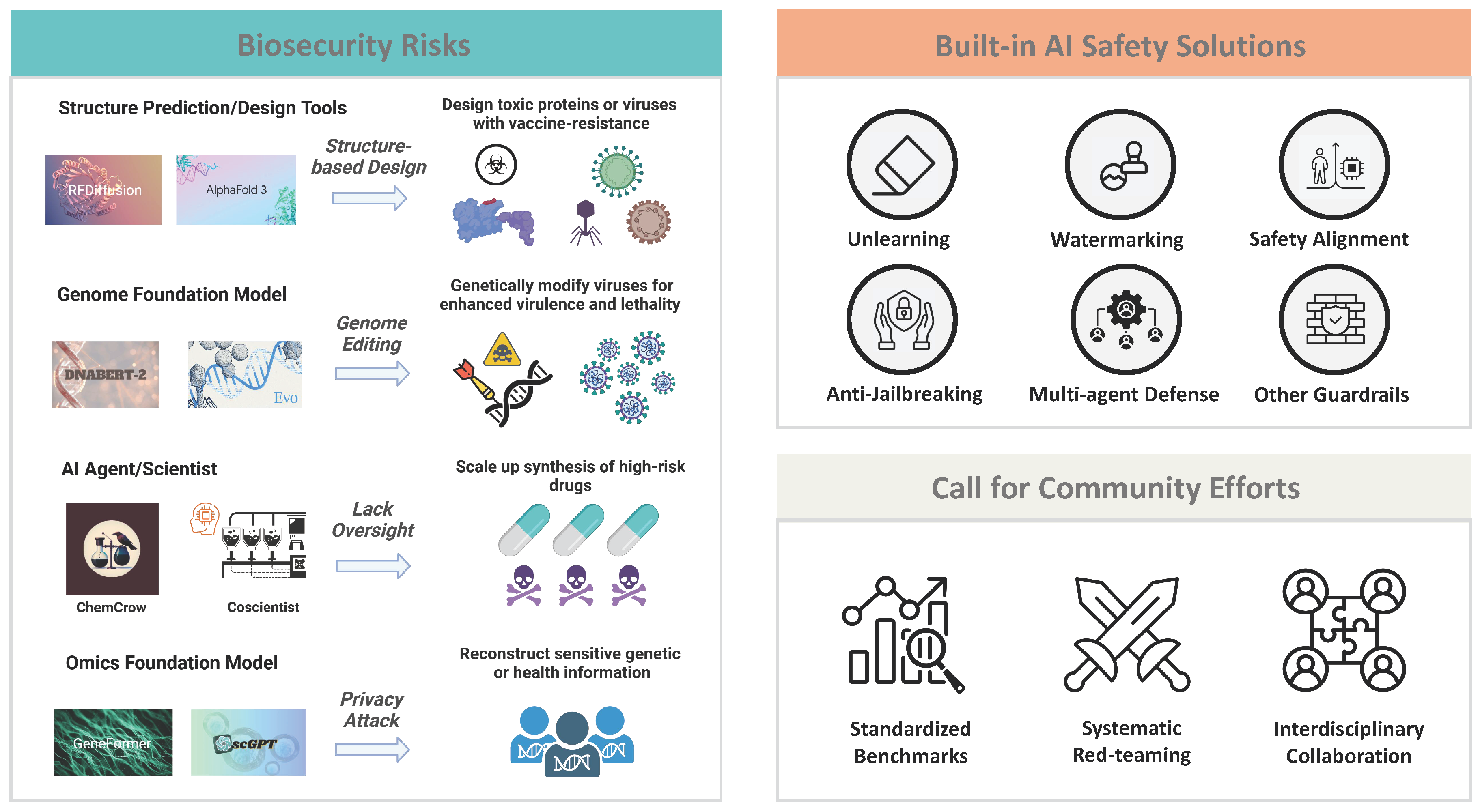

Context: A Pattern of Rising AI Safety Concerns

OpenAI made a similar announcement earlier this year about bioweapons risk from its frontier models. Soon after, ChatGPT Agent launched and bore out that warning by being rated “high” for biological risk in OpenAI’s internal assessments.

The recurrence of this pattern suggests that each new generation of AI is pushing into more complex, more sensitive territory far faster than regulators and defenders expected.

Autonomy: The Force Behind Rising Cyber Risk

In an exclusive conversation with Axios, OpenAI’s Fouad Matin emphasized that task duration, not raw intelligence alone, is driving the surge in cyber risk.

“The model’s ability to work for extended periods acts as the forcing function,” Matin said.

When an AI can run for hours without interruption, it can attempt:

- Repeated brute-force attacks

- Persistent vulnerability scanning

- Continuous probing of system defenses

However, Matin clarified that well-defended systems would likely detect many of these attempts quickly.

Unanswered Questions Remain

OpenAI has not yet revealed:

- When the first “high-risk” cybersecurity model arrives

- Whether the rating will apply to GPT-6, Codex successors, or new agentic systems

- What additional safety constraints it plans to apply

This lack of clarity underscores the unpredictable nature of frontier AI evolution.

Industry Models Are Also Getting Better at Hacking

OpenAI stresses that it is not alone. Competitors are also building systems that can detect and exploit vulnerabilities at a level rivaling seasoned security experts.

Because of this, OpenAI has expanded collaborations and created new safety initiatives.

Steps OpenAI Is Taking to Address the Cyber Threat

1. Frontier Model Forum (2023)

An industry group OpenAI co-founded with Anthropic, Google, and Microsoft to coordinate responses to emerging frontier AI risks.

2. Frontier Risk Council

A new advisory group of cybersecurity professionals who work directly with OpenAI’s internal teams.

3. Aardvark — A New Developer Security Tool

Currently in private testing, Aardvark scans developers’ code to identify vulnerabilities before release.

Early participants report that Aardvark has already uncovered critical security gaps.

Developers must apply for access due to the tool’s sensitivity.

The Bigger Picture: AI Evolution and Cyber Threat Growth Are Linked

Leading AI systems are gaining autonomy, reasoning power, and operational stamina at extraordinary speed. As these capabilities strengthen, the line between benign automation and harmful exploitation becomes thinner.

The next generation of frontier AI models may do more than assist cybersecurity teams; they could also give attackers unprecedented capabilities.

OpenAI’s warning signals a sober reality: the future of cybersecurity now depends on understanding, regulating, and securing advanced AI before it outpaces global defenses.